The Spring Archivematica UK User Group this year headed to Glasgow, hosted by the University of Strathclyde right in the centre of one of my favourite cities. The user group is open to anyone using or interested in Archivematica and is user led and independent of Artefactual Systems who develop and maintain the software. If you are interested do drop me a line! We do always welcome input from Artefactual – usually a dial in from Canada but this time an in person presentation from their new managing director Justin Simpson.

As the user group are not a formal body (although there are terms of reference) there is no budget for anything so we are reliant on members to host the session, so a big thank you is in order for the University of Strathclyde for their hospitality.

In time honoured tradition our hosts Victoria Peters and Alan Morrison from Strathclyde gave an update on where they are with Archivematica, respectively from an Archives and Research Data Management perspective.

Victoria ran through some of her workflows and mused about the best place in the workflow to undertake appraisal – before ingest into Archivematica or after? There was quite a bit of debate on this and generally the view was that it was “easier” to do this before ingest but would we still say this if better tools were available for ingest in Archivematica (there is some functionality but it isn’t that easy to work with)? Is this really a development we would like to see? Strathclyde also hope to begin automate more of their workflows – something I sympathise with very much. Meanwhile Alan echoed this desire for automation which could be achieved by the development of integration tools (which may come in the future) especially with regard to metadata capture. Again this was an issue that others returned to during the day.

Next up was Anna McNally from the University of Westminster who bravely (in my view) tackled the question of PREMIS rights. PREMIS rights – for those of you who can start to feel a bit seasick at the extreme metadata end of preservation are clearly explained in this excellent blog from our friends at Bentley Historical Library. Archivematica helps you enormously by automatically capturing most of the metadata required by PREMIS however you do have to create your own rights statements if you want to include it in the SIP. We had an interesting discussion around whether or not it was necessary to include a rights statement with the digital object and if you do what happens when the rights either are not clear or change? But if you decide not to include them are you creating problems for yourself further down the line? I’m definitely going to be doing some work around this as I move onto material where the rights are not as straightforward as they are for our corporate records.

Chris Grygiel from the University of Leeds gave the final presentation before lunch and returned to everyone’s favourite topic: workflows. This was the theme of the day as Fabi Barticioti from the London School of Economics also talked about theirs – in both cases very different workflows for very different kinds of material but really useful to be able to share and discuss approaches. Chris’s work on digital forensic workflows very much mirrors my own so I am looking forward to bouncing some more ideas around with him in the future. It raised the question again of how much work is done pre-ingest and how much post ingest. The answer as ever appears to be: it depends… Fabi looked at the very complex issue of transferring metadata between systems and reflected on the amount of work necessary to prepare metadata for ingest. She said that you can’t treat each collection as a special flower but then every collection is different…

Laura Giles from the University of Hull gave a really positive update form the Hull City of Culture project and her big message was that if you can get your creators/depositors to do just a small amount of work (eg sensitivity tagging) it can lead to huge benefits in the long run.

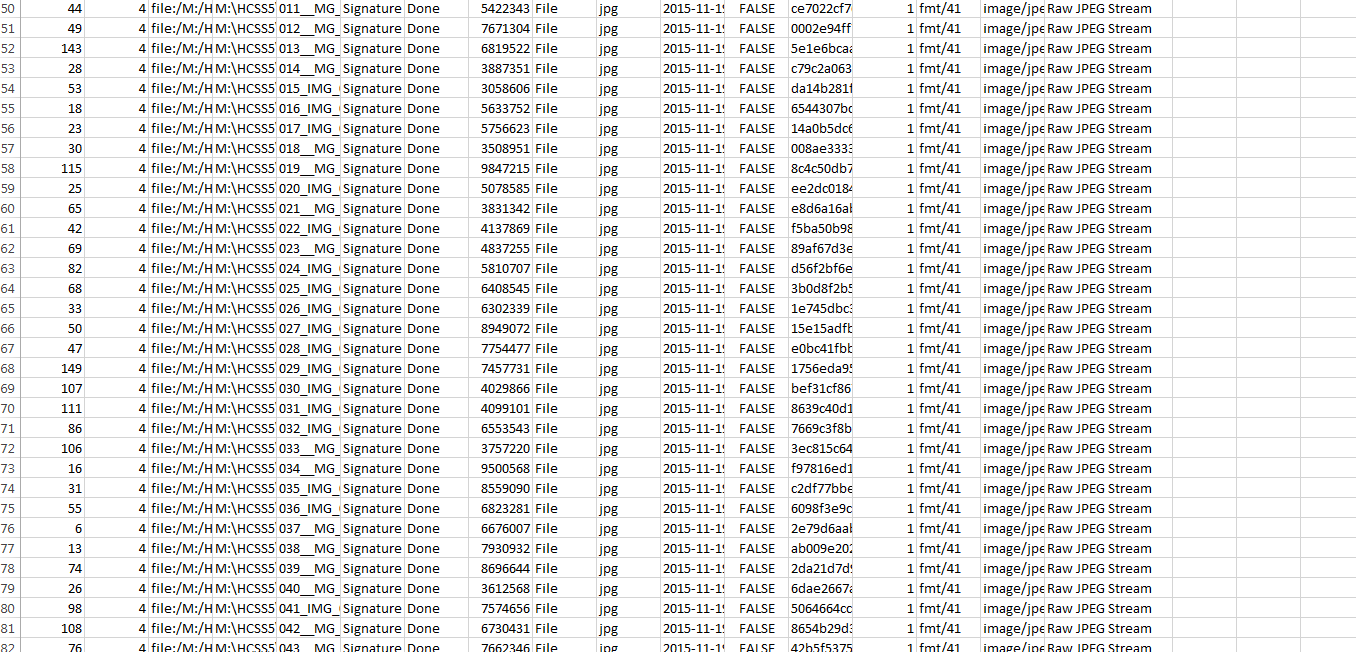

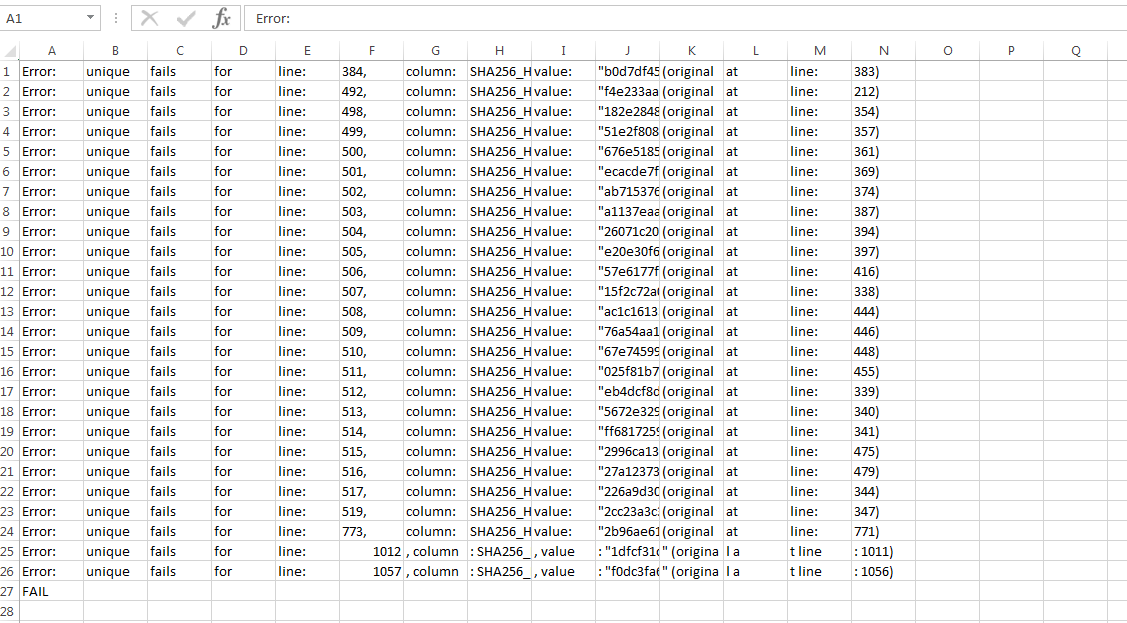

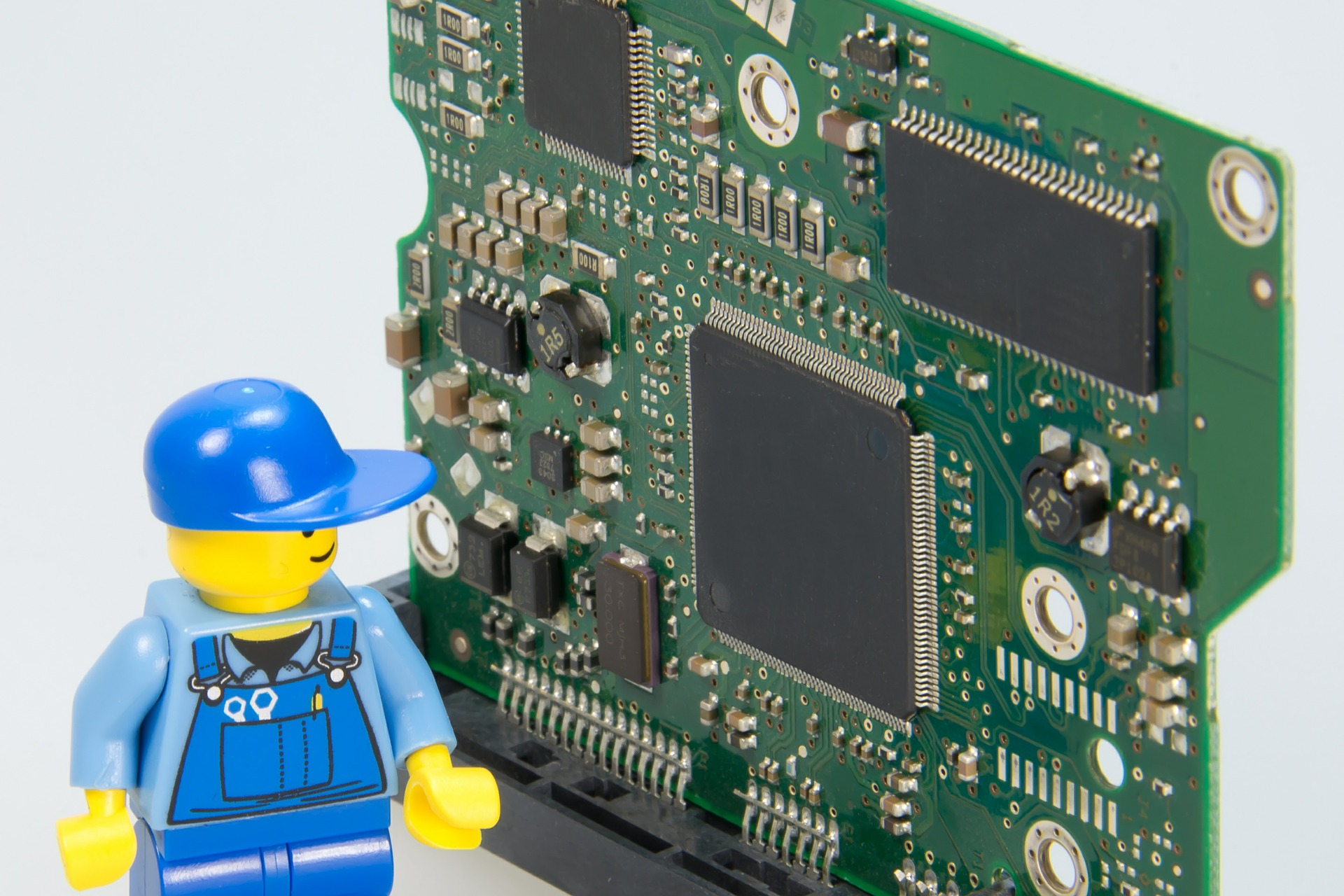

Final presentation of the day was Matthew Addis wowing us with his metadata wizardry and use of Lego figures to illustrate his talk! He had developed a really neat way of ingesting ISAD(G) metadata into Archivematica and AtoM which gave very impressive results although came with the usual health warning: you need to do lots of preflight checks. With all workflows, it doesn’t matter how neat your tools and scripts are, you still have to input the metadata, and if there are errors here it can scupper the whole thing. I wonder if there’s a use case here for the National Archives CSV validator I blogged about recently?

Finally we were really pleased to welcome Artefactual’s Managing Director Justin Simpson in person to the meeting as it coincided with a UK trip. There’s some great stuff happening at Artefactual and it’s fantastic to have the opportunity to ask questions of them directly like this.

Another brilliant Archivematica User Group meeting which was really useful as a platform to share experiences and ideas. Already looking forward to the next one!