It was great to be back at DCDC for another year. It’s always been a conference I have enjoyed, both in person and online, and a big thank you to RLUK, Jisc and the National Archives for hosting. This year, for the first time in a while, I have the prospect of an in-person conference (iPres 2022 in Glasgow – can’t wait!). Reflecting on online versus in-person events, I can see the massive benefits of online, especially for those with caring responsibilities or limited budgets (and it goes without saying that these inequities fall harder on women and people of colour). I am privileged in that I don’t have caring responsibilities that make it hard for me to get away, and I work for an organisation that can afford to send me to conferences (hence getting to iPres – thank you University of Warwick!). Having that choice, I do realise that the convenience of online is also (for me) its downfall: it is too easy to schedule too many other things around it. This time it meant I fitted the conference around work commitments and medical appointments, which was both good and bad. I will certainly be using the watch-again facility which DCDC has always offered, but this doesn’t allow for the great debate and discussion which are a highlight of any conference.

The conference got off to a great start for me with Leonie Watson on “Algorithmic Fairness and Inclusive Systems”, talking about inclusive design. It was thought-provoking and packed with useful tips on inclusive practice, including these great videos on inclusive design. One of my big takeaways from Leonie’s talk was her point about describing oneself at the start of a presentation (a verbal equivalent of alt-text), so I will now be beginning my presentations with a quick description of myself (including my age, which I noticed was the thing people were most reluctant to say!). Since the conference, Kamala Harris has created a bit of a stir amongst some right-wing commentators for doing this very thing (as if there weren’t enough other things going on in the world to get wound up about!).

It was also great to hear a session on digital exclusion and, on the flip side, on how to use digital to engage with marginalised communities. Claire Boardman from the University of York spoke about working with the communities who experience the highest levels of digital exclusion. It is a complex issue, often oversimplified as “generational lag”, whereas in reality it is not only older people who are at risk of exclusion. So-called “digital natives” do not necessarily have the skills or opportunities they need, and data poverty is a real issue.

In some ways as a contrast, Delia Keating from the National Library of Ireland talked about how having born-digital material in collections, as well as the act of collecting it, can contribute hugely to engagement and inclusion. This really rang true from my own experience: more contemporary collecting has put us in touch with people we otherwise would probably not have spoken to, and has made us tackle challenges such as social media archiving, which will hopefully lead to more engagement opportunities and more diverse and representative collections.

The final presentation in this session was from Graham Jevon, who took a close look at crowdsourced transcription projects, in particular the British Library’s Agents of Enslavement project. Part of their Endangered Archives Programme, it digitised and transcribed two colonial newspapers from Barbados with the aim of foregrounding the voices of enslaved people from these sources. It was really good to hear about some of the ethical considerations of digitisation (and outreach) projects, and these also featured in one of the “on demand” talks, “The student voice, past and present”, from the University of Salford, about the digitisation of the records of the Students’ Union. It’s something we should all be giving a lot of thought to, especially as many services (our own included) work towards making their digital collections as accessible as possible; this shouldn’t be done to the detriment or disadvantage of anyone.

I had to miss a day of the conference (although I caught a little of the National Archives breakfast briefing with Rachael Minott talking about building equity into service delivery). I’m certainly going to schedule some time to catch up on the things I missed, which are all available on YouTube.

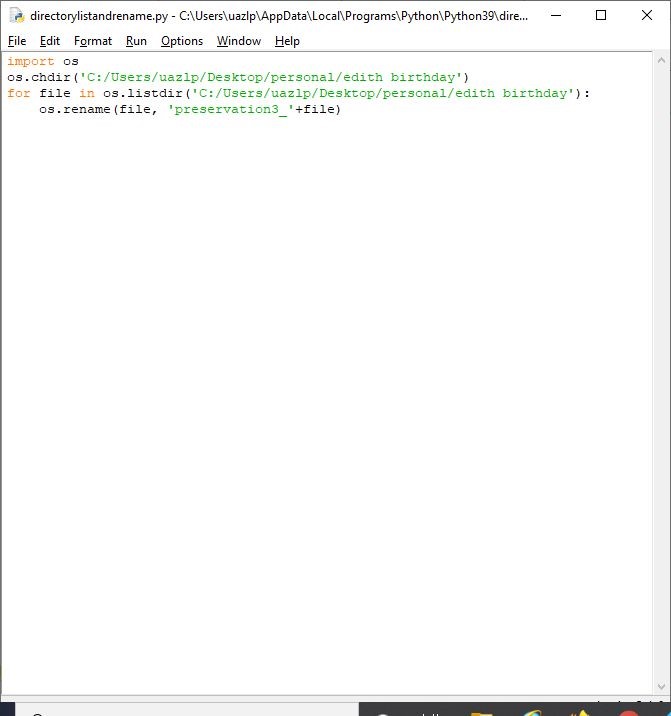

I was really pleased to be able to rejoin for the roundtable on developing computational skills for digital collections, chaired by Peter Webster. This came at a good time for me, having recently attended the Digital Preservation Coalition’s launch of their beginner’s guide to computational access, which I think will be available to everyone later in the year (it is currently members only). Working (as I do) with digital archives, I am always aware that these records can be used in ways which were unimaginable in the past; equally, computational methods offer new opportunities for archivists and curators to manage collections and make them available. It was really interesting to hear from researchers who were using computational methods in their research, and also from people who had developed modules for the awesome resource which is The Programming Historian. I was particularly struck by Chahan Vidal-Gorène’s work on non-Western scripts and automated transcription, as well as Yann Ryan’s work on creating an interactive mapping plugin for visualisations using R and Shiny.

The keynote for Day Four was from Mark Martin (@urban_teacher) and Patrick Towell (Audience Agency) on digital literacy and engagement. I found Mark’s presentation really inspiring and thought-provoking. He urged us to examine closely the ecosystem within which we are operating, and to beware of creating silos when we make assumptions about people and communities and then create or target digital spaces based on those assumptions. Getting this wrong can be very dangerous and lead to misinformation and disengagement. On a very practical level, Mark shared some stock images designed by UK Black Tech to help create positive images, especially foregrounding Black women in technology. Representation does matter! Patrick introduced the Digitally Democratising Archives project, aimed at diversifying audiences. He also got top marks from me by saying “no one has the silver bullet for digital preservation” – amen Patrick!

The final session of the day for me was “Innovative practice in search and discovery”, with a great range of panel presentations. These included the team from McGill Library talking about handwritten text recognition, Jenny Bunn from the National Archives doing a grand job of demystifying AI in the cultural heritage sector, and the one I must go back to: a collaborative team from Bristol, Birmingham, Maryland and the UK National Archives presenting on EMCODIST, a context-based search tool for email archives.

The last day of the conference was one of the best for me. I attended a workshop on using ePADD for email preservation from the team at the John Rylands Library. I’ve heard them speak about this before, but it was great to take a more in-depth dive into it and have the chance for more discussion on the topic. They even mentioned my blog post on the subject, which has prompted me to get back to blogging!

For me the conference was wrapped up with a Jisc-fronted session on machine learning, datasets and humanities research. Peter Findlay invited us to consider computers and algorithms as our new patrons, which is an interesting perspective. Jane Stevenson talked about the work of Archives Hub in this area, and her takeaway was: “this is hard”. Are institutions at the required level of maturity for this kind of work? I’m not sure we are…

This was a great conference with a lot of variety, plenty of material which I still need to digest and plenty more to catch up on online. I’m very grateful that the conference was made so accessible, both in terms of platform and in terms of being able to catch up on sessions I couldn’t make. Whether or not I have time to do so is another matter…